Sarah Petkus is a kinetic artist, roboticist, and transhumanist from Las Vegas who designs electronic and mechanical devices that encourage reflection on the human relationship with technology.

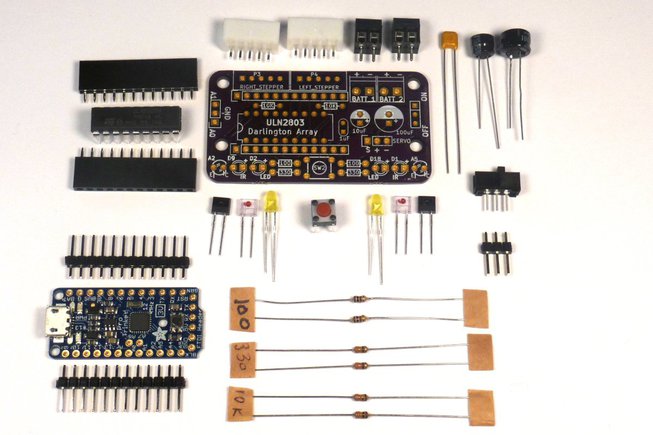

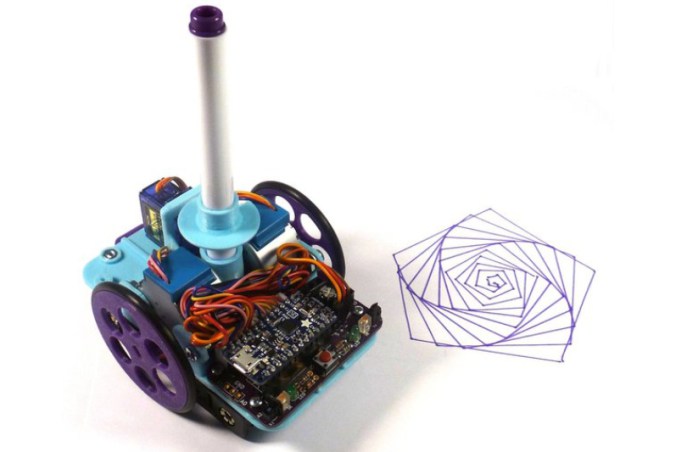

Their talk will be about a series of wearable augments built to sense, track, and indicate one’s level of excitement (or arousal)! Each wearable uses a variety of sensors as input to drive quirky electronic and mechanical devices of their own design as output. The goal is not only to create a stellar suit of electronic armor (or amour), but also to foster a dialogue about sex and intimacy among their peers that is relatable, honest, healthy, and fun.

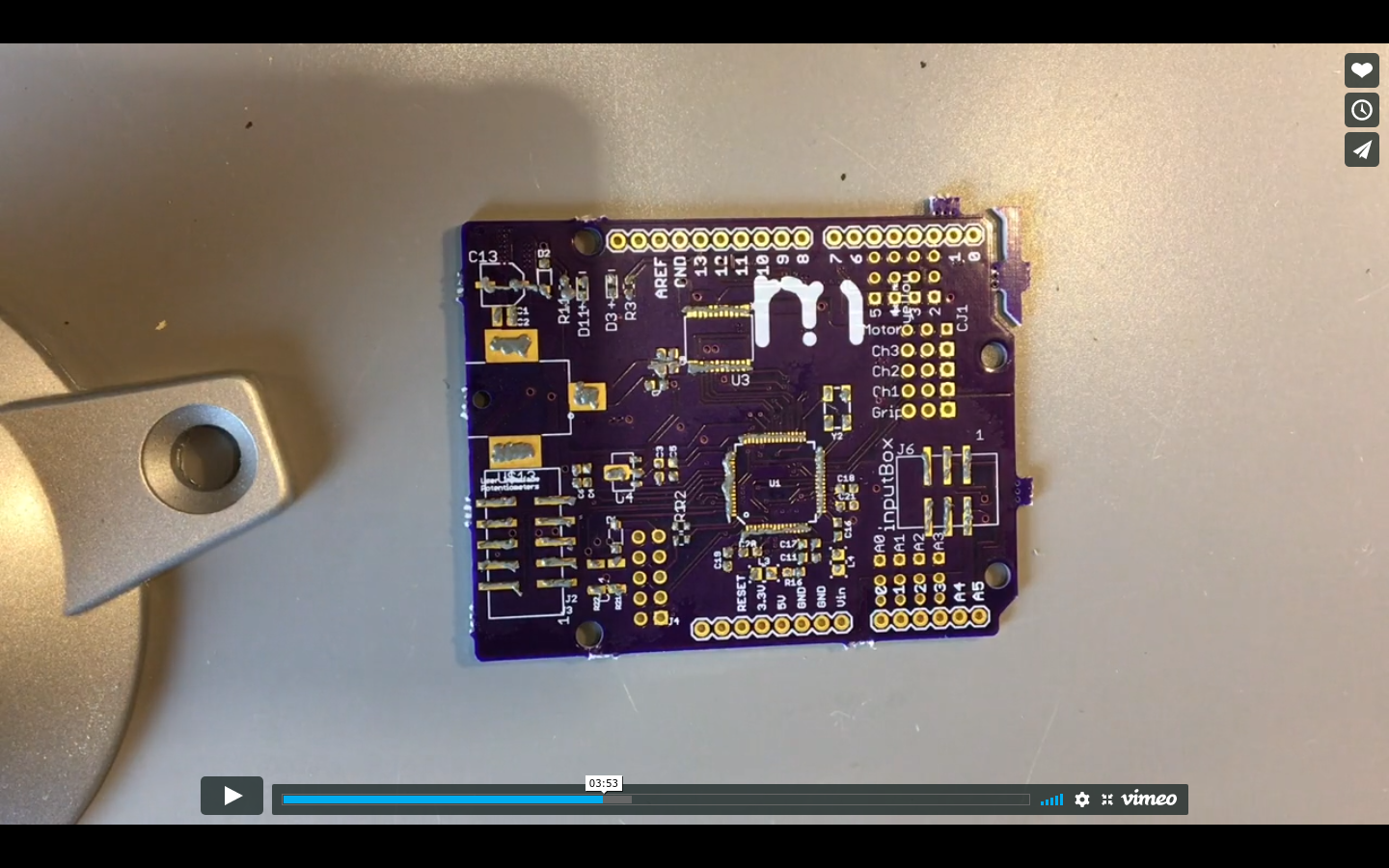

Ryan Cousins is cofounder and CEO of krtkl inc. Based in Silicon Valley, krtkl (“critical”) makes life easier for companies developing heavily connected and automated products. Ryan earned a B.S. in mechanical engineering – with an emphasis in thermodynamics and fluid mechanics – from UCLA. He has worked in both R&D and business capacities across a variety of markets, including medical and embedded, and has been granted a European patent.

After years of working on embedded systems, product development, manufacturing, and startup-ing, Ryan has had the “pleasure” of experiencing nearly every type of failure a hardware business has to offer. Ryan will share some humorous – and horrifying – anecdotes from his arduous journey, along with some key takeaways that will (hopefully) prevent others from making the same mistakes.

After the talks, there will be demos, community announcements, and socializing. If you’d like to give a 2-minute demo or community announcement, please see the organizers when you arrive to get set up.

Community announcements include looking for a project partner or a job, offering a project or job, announcing your startup launch, pitching your crowdfunding campaign, etc.

We’re looking forward to seeing you Thursday, July 12th, at 6:30pm!